A significant part of my interest in the Nerf blaster hobby is the data sets that can be collected through velocity and accuracy testing of different Nerf blasters and blaster configurations. The modification aspect of the hobby injects a seemingly endless combination of variables across a wide array of Nerf blaster platforms. I started looking at chronographs as a source of data for visualizations that summarize dart velocity statistics and accuracy / spread analytics at distance. This page summarizes my ongoing work on the subject. Check back regularly for updates!

TLDR warning

This is a long blog post intended for serious Nerf blaster enthusiasts who are interested in a discussion of methodology and statistical analysis for fps and accuracy profiling of blasters. I’ve spent time pulling together examples of why you should alter your chronograph result reporting techniques slightly to better represent the performance statistics of a given Nerf blaster. I suspect most readers are not interested in a study this deep. You can look at individual blaster pages for shorter summaries.

Hopefully you’ll stick around. This is a pretty cool topic.

Statistics – why should I care?

Hey, I can take 5 or 10 shots, grab an average, and I’m good to go, right? Well, no. Let’s take a look at a few examples of why we need to care about concepts like statistically significant populations and confidence intervals. Let’s start with 10 sequences of 10 shots each. Here are the average values for each of the 10 shot sequences:

You might look at this and think there’s a Nerf blaster in there at 112 that is outperforming the rest. However what you are really looking at are 10 random samples of shots from a 100 shot population from the same blaster profiling effort. What you are missing from this view is the concept of confidence. If I calculate 95th percentile confidence intervals for each average pictured above, I get a clearer picture of what these values represent:

The vertical gray bars represent the 95th percentile confidence intervals around the mean for each population of 10 shots. First this shows us that there’s pretty high variability in this particular Nerf blaster’s performance. The standard deviation for the 100 shot population was 17 fps on a population average of 102 fps. What’s interesting about this plot is you don’t get an idea of the variability of this particular blaster configuration (it was actually the class of darts in use that led to the wide variability in results) from the first data point which showed very consistent results through that sample set.
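To see this effect for yourself, here is a minimal Python sketch. It does not use my chronograph data: it draws 100 synthetic shots from a normal distribution with the 102 fps average and 17 fps standard deviation quoted above, splits them into 10 groups of 10, and prints each group's average with a 2 sigma +/- value. The seed, the normal-distribution assumption and the function names are all illustrative.

```python
import random
import statistics


def split_into_groups(shots, group_size):
    """Split a shot list into consecutive groups of group_size readings."""
    return [shots[i:i + group_size] for i in range(0, len(shots), group_size)]


def summarize(group):
    """Return (average, plus_minus), where plus_minus is 2 x sample standard deviation."""
    return statistics.mean(group), 2 * statistics.stdev(group)


# Synthetic stand-in for a 100 shot session (assumption: normally distributed).
rng = random.Random(2017)
population = [rng.gauss(102, 17) for _ in range(100)]

groups = split_into_groups(population, 10)
summaries = [summarize(g) for g in groups]

for number, (avg, pm) in enumerate(summaries, start=1):
    print(f"sample {number:2d}: {avg:.0f} +/- {pm:.0f} fps")
```

Every group comes from the same simulated blaster, yet the ten averages will not agree with each other. Only the +/- values hint that the groups overlap.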

The other extreme is using a well designed platform like the Caliburn. That blaster with some specific darts can yield extremely reproducible results with standard deviations of ~2.6% (the vertical scale on the bars is consistent with prior examples):

This was the most consistent configuration I have ever tested, and even then, we have a high of 174 and a low of 168 for our sampled means. I’ve seen some people refer to these as being “slightly different” when, if you broadened the population and looked at errors, they are statistically the same.

Here’s the same blaster with a different dart that yields a standard deviation of ~5.6%:

This level of spread is pretty common in the Nerf blasters I have profiled. Note the high of 176 and low of 167. If you look at the entire population of 100, the average is 172 +/- 19 (95th percentile, 2 sigma).

Confidence (WIP)

This was a particularly extreme case with a high standard deviation. One standard deviation is 1 sigma. The 95th percentile confidence intervals are 2 sigma, or 2 standard deviations.

- 1 sigma ~68% confidence

- 2 sigma ~95% confidence

- 3 sigma ~99.7% confidence
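These percentages come from the normal distribution. As a quick check, assuming shot velocities are roughly normally distributed, the share of shots expected within k sigma of the average can be computed with the error function in Python's standard library:

```python
import math


def coverage(k):
    """Fraction of a normal distribution within k standard deviations of the mean."""
    return math.erf(k / math.sqrt(2))


for k in (1, 2, 3):
    print(f"{k} sigma: {coverage(k) * 100:.1f}%")
# 1 sigma: 68.3%
# 2 sigma: 95.4%
# 3 sigma: 99.7%
```

Real shot populations can be skewed, so treat these figures as a rule of thumb rather than a guarantee.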

Sample size (WIP)

You can get away with using relatively small sample sizes for Nerf blaster profiling.

Significant Digits (WIP)

This leads me to my final recommendation. The first was to use larger shot populations. I prefer 30 for a study as a minimum. The second is to show your confidence interval – it should be stated as a “+/-” value following your average. It’s easily calculated by multiplying your standard deviation by two.
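A minimal sketch of that calculation in Python follows. The 30 readings are made up for illustration, and the sketch assumes the sample standard deviation, which is what Excel's STDEV returns.

```python
import statistics


def plus_minus(shots):
    """Return (average, 2 sigma) for a list of fps readings, rounded to whole fps."""
    average = statistics.mean(shots)
    two_sigma = 2 * statistics.stdev(shots)  # sample standard deviation x 2
    return round(average), round(two_sigma)


# Hypothetical 30 shot session, not real chronograph data.
shots = [98, 100, 102] * 10

average, band = plus_minus(shots)
print(f"{average} +/- {band} fps")  # 100 +/- 3 fps
```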

Finally consider your significant digits. I’ve seen a few YouTubers report averages on 5 shot sequences of figures like “202.5 fps versus 181.3 fps for a difference of 21.2 fps”. We know from the prior discussion there’s no way anyone could be this precise, with fractional fps numbers. We’ve seen standard deviations of about 2.6% at best in my examples, ranging up to 17% for some Nerf blaster / dart combinations. That’s one standard deviation of the population (spread) – we’re really after 2 sigma. At best these figures should have been reported as “202 versus 181 for a difference of about 20 fps, or ~10%”.

Don’t report decimals, even if your chronograph does. You will look silly.
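Here is one way to enforce this when reporting a comparison, sketched in Python. Rounding the difference and the percentage to the nearest 10 is an assumption chosen to mirror the “about 20 fps, or ~10%” wording above. Pick whatever coarseness your own spread justifies.

```python
def compare(a_fps, b_fps):
    """Describe two averages without implying precision the data cannot support."""
    difference = a_fps - b_fps
    percent = difference / a_fps * 100
    return (
        # Python's round() sends exact .5 ties to the nearest even number,
        # so 202.5 becomes 202 here.
        f"{round(a_fps)} versus {round(b_fps)} "
        f"for a difference of about {round(difference, -1):.0f} fps, "
        f"or ~{round(percent, -1):.0f}%"
    )


print(compare(202.5, 181.3))
# 202 versus 181 for a difference of about 20 fps, or ~10%
```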

I’ve recently viewed a YouTube video where the chronograph averages of a few shots were reported with 5 significant digits (5!!!). Remember our plot sequence of a random 10 shot sampling of a high standard deviation population? Let’s add 5 sigfigs to that:

The decimal places are just silliness. Even if your chronograph presents the data in that format, it’s up to you to correct it.

Cross comparing chronographs

My initial testing focused on a Caldwell brand chronograph. After testing a variety of Nerf blasters using different configurations, my methods seemed to yield readings that were significantly lower than what other hobbyists were reporting. At this point I decided to take my project in a new direction, focusing on different chronographs and different testing conditions as a foundational component to my work. This section details the equipment I’m using and the different conditions I’m testing under.

Equipment

Caldwell Ballistic Precision Chronograph

The first chronograph I purchased was this one from Caldwell. It is a solid entry level chronograph that works out of the box with your iPhone / Android. It collects and archives shot sets that you can email, text or place on a cloud drive. This feature is immensely helpful when dealing with large shot counts per data set (e.g. the standard 100 round samples I’m taking for my tests). This is my entry level chronograph of choice because of this feature.

Key specifications:

- 5 to 9,999 feet per second velocity range

- +/- 0.25% accuracy

- 20-120 degrees Fahrenheit operational temperature range

- One 9-volt alkaline battery (not included)

Competition Electronics ProChrono Digital

I purchased a Competition Electronics ProChrono Digital to validate the results I was getting with the Caldwell Ballistic Precision. I found the construction of this unit to be a bit flimsier than the Caldwell, and when you add in the cost of the Bluetooth unit that allows you to easily offload large data sets from the chronograph, it’s about 50% more expensive. If I were recommending an entry level chronograph, I’d recommend the Caldwell over this unit. That said, it helps to have multiple units around for testing purposes, so there is value to me in having this chronograph in my collection.

Key specifications:

- 21-7,000 feet per second velocity range

- +/- 1% accuracy

- 32-100 degrees Fahrenheit operational temperature range

- One 9-volt alkaline battery (not included)

Caldwell Chronograph Light Kit

I picked up a lighting kit from Caldwell for testing indoors. This unit can also be used for testing with the Competition Electronics chronograph I purchased.

Video and software analysis

Another interesting alternative to chronographs is the combination of video recordings and an open source software project that tracks projectiles on video. I’ll be testing out this combination as well to compare to the chronograph readings I’m taking. Since this is a highly manual process I don’t foresee using this method on a broad scale, but we will see.

Doppler based devices

I’ve also been investigating Doppler based chronographs, but their minimum fps ranges vary from 70 to 100 fps. This would rule out stock blasters and blasters with entry level modifications, but higher end modifications and homemade Nerf blasters would be within their minimum velocity ranges. One unit in particular, LabRadar, seemed really interesting because it tracks a projectile down range, monitoring velocity along the way. It comes with a hefty price tag of $560, but a combination of velocity tracking coupled with some kind of external spread monitoring could be an interesting solution.

A note on batteries

I’ve experimented with Alkaline, NiMH rechargeables, and Li-ion without any significant impact on results. I’ll continue to experiment with different batteries and note if there are any long term impacts during chronograph sessions.

Conditions

Indoor

I do most of my testing indoors to keep temperature and humidity consistent across data collection efforts. The chronograph is placed on a bar table in a bay window. The indoor lighting kit is used to ensure adequate lighting is provided to the sensors. A Sterilite bin is placed vertically behind the chronograph to act as a catch bucket and to protect the walls. A towel is taped to the front upper brim of the bin to help slow the darts. For long range tests a trifold is used as a larger backstop.

The muzzle of the Nerf blaster is placed within a few inches of the chronograph. I generally record these as “within 1 ft” because on some designs the actual barrel is set back within the blaster itself, which places it farther away from the chronograph. This is the case for some of the stefan dart kit based blasters like the two I have containing a Worker Stefan kit and the Artifact Punisher kit.

Distance profiles are collected at 6ft intervals. I can extend this range across the house to about 48 ft giving me a total profile of 1, 6, 12, 18, 24, 30, 36, 42 and 48 foot distances, for a total of 9 ranges.

Outdoor

I’m still working on standardizing an outdoor method. Right now I’m collecting spot data with a few shots around the yard in different lighting conditions to quickly compare to my indoor readings which are my primary collection method.

Statistical concepts in use

I’ll be using a variety of basic statistical concepts and plotting techniques in this post. This section walks you through those methods and my opinions on how I prefer to profile Nerf blasters and darts.

Populations

The foundation of any data analysis is the data that we collect. You’ll read references to “populations” frequently in my posts. I’m talking about a series of readings collected on the chronograph in a profiling session. I differ from a good bit of the hobby in my target population count; statistical analysis on small populations like 10 shots is a quick but imprecise way to profile a Nerf blaster. Typically you will want to target a minimum population of 30 shots for a statistical analysis. I collect 100 in most of my profiling sessions so I can take a broader look at the data set.
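A rough way to see why shot count matters: the uncertainty in an average shrinks with the square root of the number of shots. The Python sketch below uses the 17 fps standard deviation from the high-variability example earlier and the same 2 sigma convention used throughout this post. The function name is illustrative, and for very small samples the correct multiplier is larger than 2, so the small-sample figures are optimistic.

```python
import math


def mean_half_width(sd, n, k=2):
    """Approximate +/- on the average: k standard errors, where SE = sd / sqrt(n)."""
    return k * sd / math.sqrt(n)


SD_FPS = 17  # standard deviation of the 100 shot population discussed earlier

for n in (5, 10, 30, 100):
    print(f"{n:3d} shots: average known to about +/- {mean_half_width(SD_FPS, n):.1f} fps")
```

Going from 10 to 100 shots narrows the band on the average by a factor of about 3, not 10, which is why returns diminish quickly beyond a few dozen shots.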

Stats

Most chronographs will calculate some basic statistics for you within their shot recordings. You can use these stats for a quick analysis, but I prefer using the remote collection capabilities of the Caldwell to record readings from a large shot population, load the data into Excel, analyze and plot. Typical concepts that I will refer to are:

- Average (mean)

- Standard deviation (1 sigma)

- Standard Error

- 95th percentile confidence interval (2 sigma) of the population

- 95th percentile confidence interval of the mean

- 25th percentile

- 50th percentile (median)

- 75th percentile

- min

- max

- normal distribution

Most of these statistics can be represented in a hybrid scatter / box plot. These figures are really useful in comparing more than just an average – we can look for skews in a population, and easily see the spread of fps ranges recorded.
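For readers who would rather script this than use Excel, here is a minimal Python sketch that computes the statistics listed above from a list of readings. It assumes Python 3.8 or later (for `statistics.quantiles`), the sample standard deviation, and the 2 sigma convention for both intervals. The dictionary keys are illustrative names, and the "inclusive" quartile method is a choice that may differ slightly from your spreadsheet's.

```python
import math
import statistics


def profile(shots):
    """Summarize a list of fps readings from one profiling session."""
    n = len(shots)
    mean = statistics.mean(shots)
    sd = statistics.stdev(shots)  # 1 sigma (sample standard deviation)
    std_error = sd / math.sqrt(n)  # standard error of the mean
    p25, median, p75 = statistics.quantiles(shots, n=4, method="inclusive")
    return {
        "n": n,
        "mean": mean,
        "sd": sd,
        "std_error": std_error,
        "pop_interval": 2 * sd,          # +/- band for individual shots
        "mean_interval": 2 * std_error,  # +/- band for the average itself
        "p25": p25,
        "median": median,
        "p75": p75,
        "min": min(shots),
        "max": max(shots),
    }


# Hypothetical readings for illustration only.
example = profile(list(range(95, 106)))
print(example["mean"], example["p25"], example["median"], example["p75"])
```

Keeping the population band and the band on the average as separate entries makes it harder to quote one while meaning the other.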

Populations as a function of distance

I mentioned a 100 shot population for most studies. The basis for this selection was distance profiling of Nerf blasters: as the distance between the blaster and the chronograph increases, the accuracy of the blaster drops off and I register fewer hits per 100 shots, so I need a large starting count to still obtain fps readings at range. I may have to increase this shot count as I push further out to the 48 foot limit of my indoor range, so you may see a mix of studies based on 100 shots or more.

Plotting techniques

I’m a big fan of Tufte’s information design principles. Key concepts he advocates include using earth tones so viewers can focus on the data, and removing unnecessary clutter from your graphics: every line / color / text should mean something. It’s really a minimalist design approach that doesn’t use gaudy color schemes and crazy word art to distract you from the data itself. The presentation should be simple and engaging, and hopefully beautiful.

Here’s an example of a shot summary from a 100 shot study:

In this case I provide you with the average shot velocity (fps) with a 95th percentile confidence interval for the population. I’m more interested in the population confidence interval than the confidence interval of the mean. This seems like a subtle difference, but the population confidence interval is more interesting to me in this particular application because it sets an expectation of where any given shot may fall from that Nerf blaster around the mean. This helps us convey the confidence of our spread for a specific configuration.

You can see other subtle graphic inclusions in the plot that, once you understand how to read it, become quite useful when comparing a series of these plots. The plot includes:

- a scatter plot of the 100 shots in the study represented by the vertical gray data points.

- the average or mean of the population represented by the largest horizontal gray bar.

- the median / 50th percentile of the population represented by the white circle outlined in gray.

- the minimum and maximum velocities represented by the horizontal gray bars labeled min and max.

- the 25th and 75th percentile values represented by the horizontal gray bars, sometimes hidden behind the mean and median.

- I may update this to include a visual representation of the 95th percentile confidence interval.

Comparing chronographs

Chronographs from different vendors yield different results. Our chronographs are precise, but the lingering question is “are they accurate?” I’ll walk you through profiling two chronographs with the same blasters and foam, illustrating how your chronograph results will vary.

Caldwell Ballistic Precision Chronograph

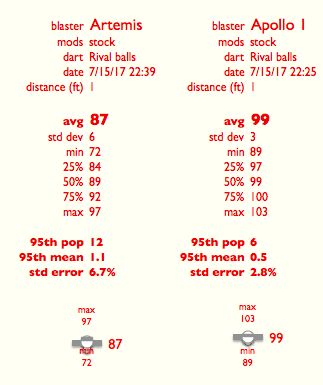

First up is the Caldwell profiled with three Nerf Rival blasters, an Artemis and two different Apollo blasters. The Apollos yielded very consistent results, more consistent than any blaster I have ever profiled.

Competition Electronics ProChrono

I ran the Artemis and the first Apollo through the Competition Electronics ProChrono (CEP), using my Caldwell indoor light kit (the same one used with the Caldwell chronograph, on the same bench, under the same conditions). The results are summarized below.

Discussion of the chronograph readings

- The Apollos were incredibly reproducible in their velocities. I chronographed both units on the Caldwell Ballistic Precision Chronograph (CBPC), firing 100 shots each. Apollo 1 yielded 102 +/- 6 fps, while Apollo 2 yielded 102 +/- 4 fps. This was in range with velocities I have seen reported by others. I had not seen consistency like this from other blaster platforms in my work, so my current thinking is to use these as long term reference blasters for documenting the reproducibility of my setup.

- The Artemis came in lower at 92 +/- 12 fps on the CBPC (I’m providing 95th percentile confidence intervals for the populations here). This too was in range for what other folks have reported.

- The CEP yielded 99 +/- 6 fps for the Apollo 1, and 87 +/- 12 fps for the Artemis. Looking at the confidence in the mean, the intervals are 99 +/- 0.5 (CEP) versus 102 +/- 0.5 (CBPC) for the Apollo 1, and 87 +/- 1.1 (CEP) versus 92 +/- 1.1 (CBPC) for the Artemis. So the CEP is reporting ~5 fps lower for the Artemis, and ~3 fps lower for the Apollo. It was interesting to note how much variability in readings can potentially be introduced by the chronograph in use.

- After 500 Rival rounds, I ran 100 Worker stefans through my Caliburn and got 170 +/- 19 fps for the population (170 +/- 1.9 for the confidence in the mean). I saw more variability this time after I had a jam and pinched a stefan in the barrel with the ram, so I’ll have to take a closer look at what I may have done to drive up the confidence band around the population, but the average I saw was in line with my prior results.
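A quick way to judge whether two chronographs really disagree is to compare the gap between their averages with the combined +/- band on those averages. The Python sketch below applies that check to the confidence-in-the-mean figures quoted in the list above. Combining the two bands in quadrature assumes the sessions are independent, and the function name is illustrative, so treat this as a rough screen rather than a formal significance test.

```python
import math


def means_differ(mean_a, band_a, mean_b, band_b):
    """True when the gap between two averages exceeds their combined +/- band."""
    combined_band = math.sqrt(band_a ** 2 + band_b ** 2)
    return abs(mean_a - mean_b) > combined_band


# Confidence-in-the-mean values quoted above (CEP first, CBPC second).
print(means_differ(99, 0.5, 102, 0.5))  # Apollo 1: True
print(means_differ(87, 1.1, 92, 1.1))   # Artemis: True
```

Both gaps are several times larger than the combined band, which supports reading the 3 to 5 fps offset as a difference between the chronographs and not as shot-to-shot noise.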

Here is a summary of the full bench session which also included the Caliburn run.

Using a reference blaster

A concept that we often use in lab sciences is that of a reference material or configuration. This allows us to take a known standard and profile it on a regular basis to measure the consistency over time of our test configuration. Things that we need to consider are:

- How consistent is the reference item, in this case a blaster?

- How close is the reference blaster to the fps ranges of the blasters we are profiling?

We can use this reference blaster for a variety of purposes. One can be a reference standard that we can inject periodically within a run to see if we have any changing test conditions that may be impacting our results. Another strategy can be to “bracket” shorter bench sessions with the reference blaster, again improving our confidence in the methodologies in use by illustrating we obtained the same result with the same blaster at the beginning and end of the session. A third use is long term profiling of our methodologies by monitoring the reference blaster over the course of months.

Selecting a reference blaster

I am continually looking at blaster configurations that yield consistent results. In most cases I have seen entry level Elite blasters yield highly variable results from copy to copy. I’ve seen Retaliators come in at 55 fps new from the store. Other folks report them in the high 60’s to low 70’s. This is a pretty wide range for a reference blaster.

Given how Nerf has targeted Rival for more elite game play, that line may be more promising in terms of yielding consistent results from copy to copy. I picked up two Nerf Rival Apollos during a recent Amazon sale, and started investigating those blasters. I will cover the results in the next section.

Bench session with a reference blaster

Here’s a summary graphic from a bench session with a Nerf Rival Apollo as a reference blaster bracketing a series of X Zeus 2 magazine and dart profiling runs.

Note how the same results were obtained with the Apollo before and after X Zeus testing; 101 +/- 6 fps and 101 +/- 5 fps respectively. This tells us our chronograph, in this case the Caldwell, yielded consistent results with our reference blaster, which also matched up to prior bench sessions where this blaster yielded 102 +/- 5 fps. I can conclude that my Caldwell yielded consistent results over the course of the bench session, and yielded consistent results week to week.

Though the use of a reference blaster adds more time to an individual bench session, it will provide added confidence in reported results, increasing the credibility of both the results and the person producing them.

Long term reference profiling [WIP]

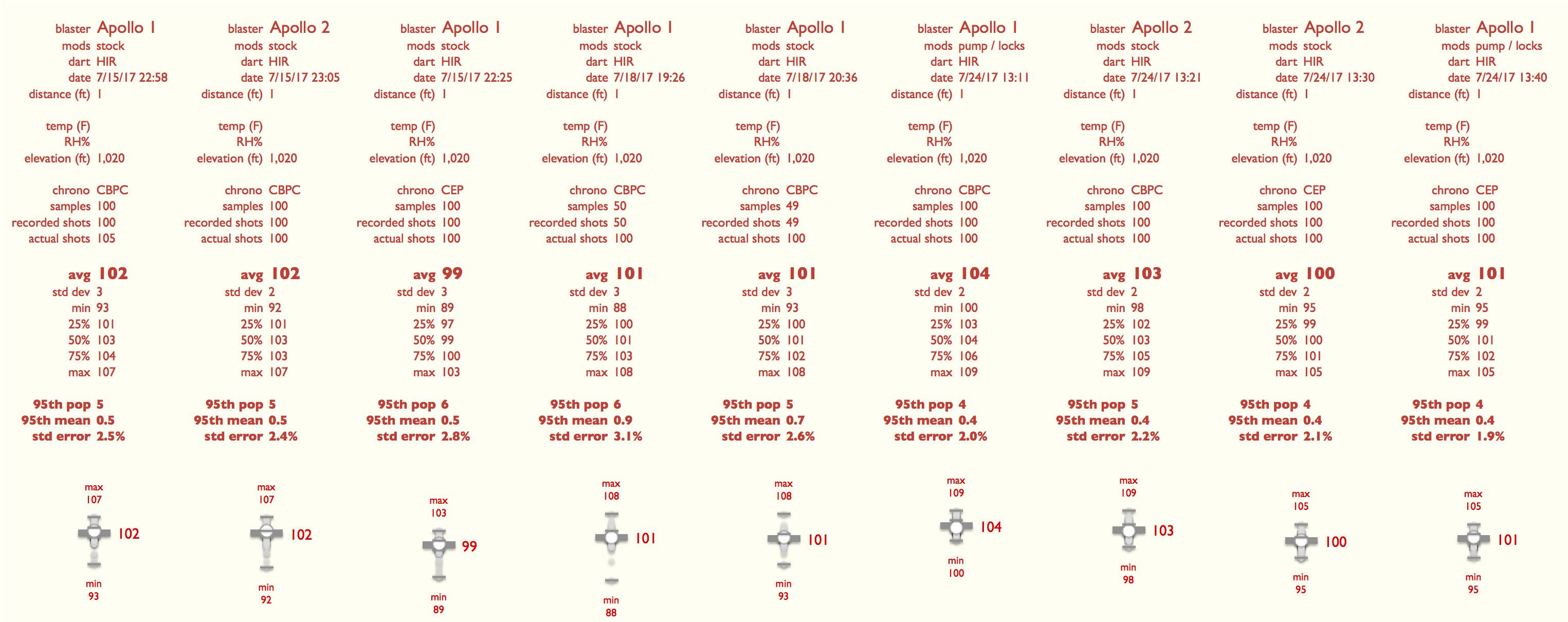

I’m still working on this section, as I’d like to have multiple months of data plotted with the same blaster to illustrate this concept. As of July 2017 I’ve collected 9 sets of samples for the Nerf Rival Apollo. This collection occurred across two different copies of the blaster and two different chronographs.

I’ll be working on a time series summary plot, but for now, here is the full data set:

Results correction

In my opinion, this is a tricky subject. I’ve seen a recent Reddit post where a person corrected down his profiling results at 225 fps by “10%” to 205 fps because a stock blaster yielded results of 80 fps which was about “10%” higher than what other folks typically report for Elite blasters.

I don’t think we have enough data to be confident in that kind of correction. A few things to consider:

- I’ve seen stock blasters on the same model of chronograph used by this Reddit user come in 10 fps LOWER than what the hobby is reporting. So do I push all my values up 15%?

- On the same chronographs that reported Elite blasters at lower fps, I’ve measured two Nerf Rival Apollos within the ranges typically reported by other nerfers. So now I have data for Elites that are lower and Rivals that are in line with other hobby readings. What do we do?

- We’re profiling stock velocities in the 70 to 100 fps range (or 50 to 100 based on my profiling), but we’re trying to extend those corrections to readings taken in the 200 to 300 fps range. That’s a big gap.

- No reference blaster data was collected at the time of the profiling of the blaster under study. I’ve seen individual blaster performance drift over time, and we know external conditions can contribute to chronograph readings. If we don’t have a solid set of reference data from the time of the test under consideration, we really don’t have an effective way to accurately “correct” a reading.

So what to do? We need more data. We cannot assume that a 10% delta seen on Elite class blasters in the 70 fps range can be applied to any modified or custom blaster recorded at ranges 3 to 4 times higher than the Elites. We really don’t have good data on the consistency out of the box of any given Elite blaster. I have seen Elites yield wildly different results, ranging from 55 fps to 80 fps reported by different folks. This is way too inconsistent to draw conclusions on correction factors using them to correct for custom blasters at far higher ranges.

I’d rather see us spend more time as a hobby profiling specific off the shelf blasters with more precise data to determine if there is a blaster worthy of being used as a reference blaster. In my limited experience, the most promising blaster so far is the Nerf Rival Apollo. I’m going to continue to profile this blaster model, as I think it has promise, but I need readings from additional copies to bolster my confidence in that theory.

We’d also have to target some kind of homemade blaster that is of a cheap, simple design that can achieve 200 fps ranges so we have a blaster that is more in line with the velocity ranges we would like to operate in. In most cases I do not like applying models beyond 2x the range in which they were collected unless I have some very solid evidence this model will scale that far above the data collection range.
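The arithmetic behind the Reddit example is easy to reproduce, which makes the size of the extrapolation concrete. In the Python sketch below, treating the “10%” as a multiplicative over-read that gets divided out is an assumption about how that poster arrived at 205 fps, and both function names are illustrative.

```python
def scale_correction(reading_fps, over_read_fraction):
    """Divide out a fractional over-read (0.10 means the chronograph reads 10% high)."""
    return reading_fps / (1 + over_read_fraction)


def extrapolation_ratio(reading_fps, reference_fps):
    """How many times higher the corrected reading is than the reference velocity."""
    return reading_fps / reference_fps


print(round(scale_correction(225, 0.10)))      # 205
print(round(extrapolation_ratio(225, 80), 1))  # 2.8
```

The correction was derived from an 80 fps reading and applied to one roughly 2.8 times higher, which is beyond the 2x limit described above.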

Nerf blaster fps profiling (WIP)

That was kind of a long setup before getting to actual profiling data. This is the meat of the data most folks are interested in. We’re now combining the test methodology, statistical analysis and plotting techniques to compare the performance of a variety of Nerf blasters and darts.

Caliburn / Stefan dart profiling

I acquired a Caliburn kit, printed my own parts, and assembled my Caliburn magazine fed pump action Nerf blaster in June of 2017. This quickly became my favorite blaster. It’s simply fun to plink with and it’s an amazingly simple yet robust design.

I performed some initial testing with my Caliburn using a variety of 3rd party Nerf-compatible darts. Most of my test darts are short darts – cut down darts that are more accurate than stock full length darts. I tested a variety of darts under the same conditions. I noted the general test conditions in the plot itself, as well as the collection time.

The impressive dart in this run was the one made by Worker. The Worker stefans showed an absolutely amazing 5% 95th percentile confidence interval, far superior to any other dart I tested. Though the Worker darts did not hit velocities above 180 fps, they consistently fell in the 170’s and never fell below 158. You can easily see how tight the velocity population of these stefan darts was compared to the other stefan darts under test. The Worker darts may look a little funny, but they are a joy to fire.

Retaliator modification progression

This is an interesting progression of Stefan dart velocities from a base Nerf Retaliator. We start at the left with a stock configuration using a 2.5kg spring, and move through a Stefan conversion coupled with a 7kg spring, followed by a 9kg and 10kg spring upgrade. You can see a steady improvement in dart velocity, culminating with a 135 +/- 15 fps top grouping from the 10kg spring.

I really enjoy working with the Nerf Retaliator. It’s a nice base Nerf blaster that has lots of aftermarket kits that you can use for easy modding. The variety of available springs is nice as well, allowing you to tune the fps ranges that you want to target for indoor / outdoor / target shooting.

This data was collected in the Spring of 2017 using my indoor Caldwell setup with a light kit.

Retaliator with Worker / Artifact mods

This plot was a modification of the original progression presented above. My initial aftermarket kit for one of my Nerf Retaliators was from Worker. I wanted to compare that to the much more expensive Artifact Punisher kit to see how well the two breech replacement kits matched up. Interestingly the performance of both stefan kits was similar, which has me leaning toward the Worker kit ($17.50 from Monkee Mods) as opposed to the Artifact Punisher ($50 from Monkee Mods, $45 from Tacticool Foam).